Homework 3

Due: April 16, 2018 @ 11:59pm

Instructions

For this homework assignment, you will practice using the SelectorGadget Chrome extension to find the CSS selectors needed to scrape information from a webpage and use the rvest package to scrape data from the official Mason Patriots sports website.

Obtain the GitHub repository you will use to complete Homework 3, which contains a starter RMarkdown file named homework_3.Rmd. Use this file to do your work and write-up when completing the questions below. Remember to fill in your name at the top of the RMarkdown document, and be sure to save, commit, and push (upload) frequently to GitHub so that you have incremental snapshots of your work. When you’re done, follow the How to submit section below to set up a pull request, which will be used for feedback.

Part 1 – SelectorGadget practice

One way to target specific information on a webpage is through CSS selectors, and becoming more comfortable with them will help you build more effective webscraping code. The SelectorGadget Chrome extension is a convenient tool for determining the CSS selectors you need for a webscraping task. For a refresher on how to use the extension, review the SelectorGadget vignette.

For this part of the homework, use the SelectorGadget tool to figure out the CSS selectors needed to scrape specific data from a linked page. You only need to report the CSS selectors needed to get the information and do not need to write rvest code to formally extract it, although you are welcome to write code to test the CSS selectors if you like.
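If you do want to test a selector in code, the sketch below shows one way to do it with rvest. It is only an illustration: the HTML snippet and its class names (show, genre, runtime) are made up for this example and do not correspond to any of the real pages used in this assignment.

```r
library(rvest)

# A small, made-up HTML snippet standing in for a real page.
# Assumption: these class names are invented for illustration only.
toy_page <- read_html('
  <div class="show">
    <span class="genre">Comedy</span>
    <span class="genre">Drama</span>
    <span class="runtime">22 min</span>
  </div>
')

# Try out a candidate CSS selector and pull the text of every match
genres <- toy_page %>%
  html_nodes(".show .genre") %>%
  html_text()

# Inspect what was matched and how many elements came back
genres
length(genres)
```

Checking the length of the result is a quick way to see whether a selector matches too many or too few elements.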

- Using the SelectorGadget tool, find the CSS selectors for the following information on the IMDb page for the television show The Office, https://www.imdb.com/title/tt0386676/:

  - Number of episodes
  - Certificate (TV Rating)
  - First five plot keywords
  - Genres
  - Runtime
  - Country
  - Language

- Using the SelectorGadget tool, find the CSS selectors for the following information on the data.gov Data Catalog, https://catalog.data.gov/dataset:

  - Number of datasets found
  - Dataset names (example: Demographic Statistics By Zip Code)
  - Dataset organization (example: City of New York)
  - Dataset description (example: “Demographic statistics broken down by zip code”)
  - Dataset type (the ribbons on the upper-right of each row, for example: Federal, City)

Part 2 – Scraping Mason Patriots Scores

Webscrapers can be used for all kinds of purposes, such as building movie review databases, tracking prices for goods and services, and analyzing how a news story is reported on different news sites. Collecting sports data is another example, which can be used to quantify how valuable players are when putting together a fantasy sports team. Actual sports teams also employ statistical methods when drafting players and developing strategies, with Sabermetrics (depicted in the movie Moneyball) being one of the better-known examples.

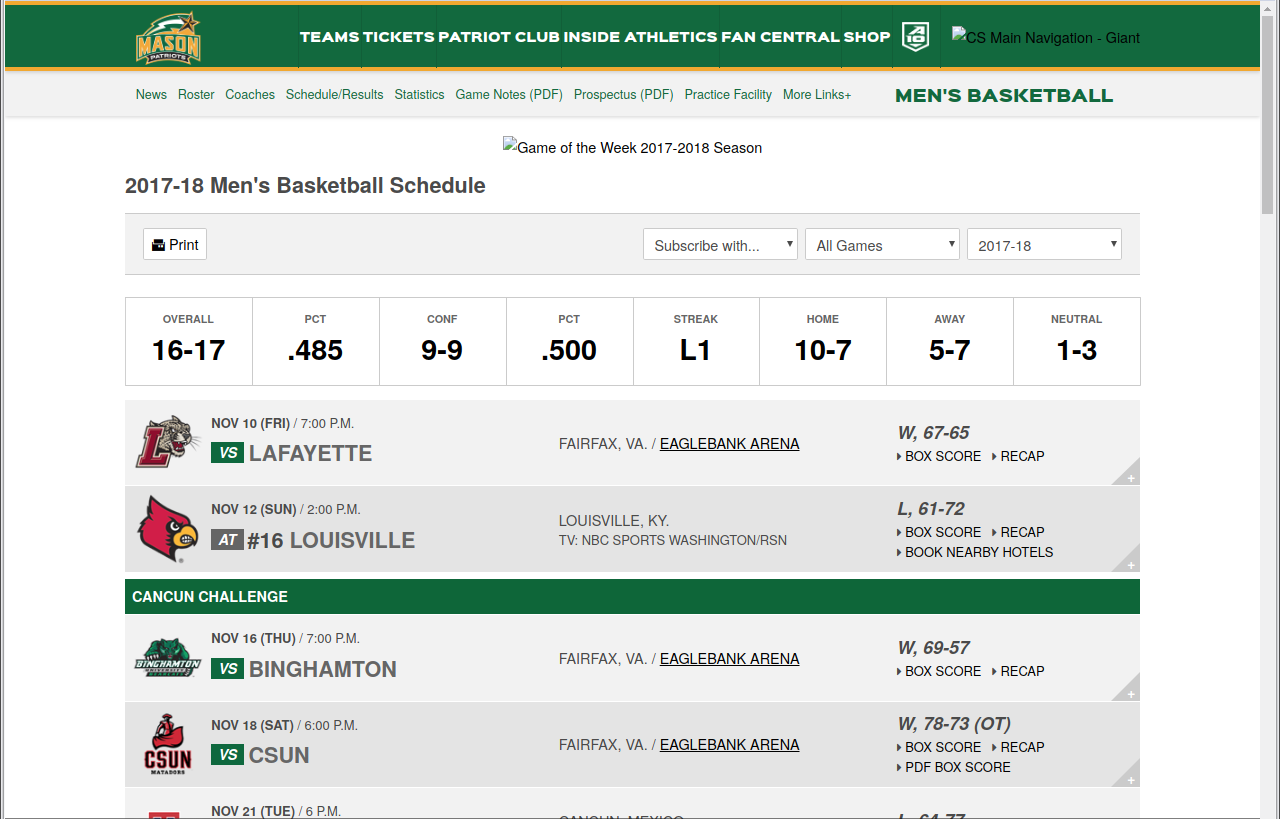

For this part of the homework, we will scrape the 2017-2018 season schedules and scores for the men’s and women’s basketball teams on the official Mason Patriots sports site. For reference, the 2017-2018 schedule and scores page for the men’s team should look like this:

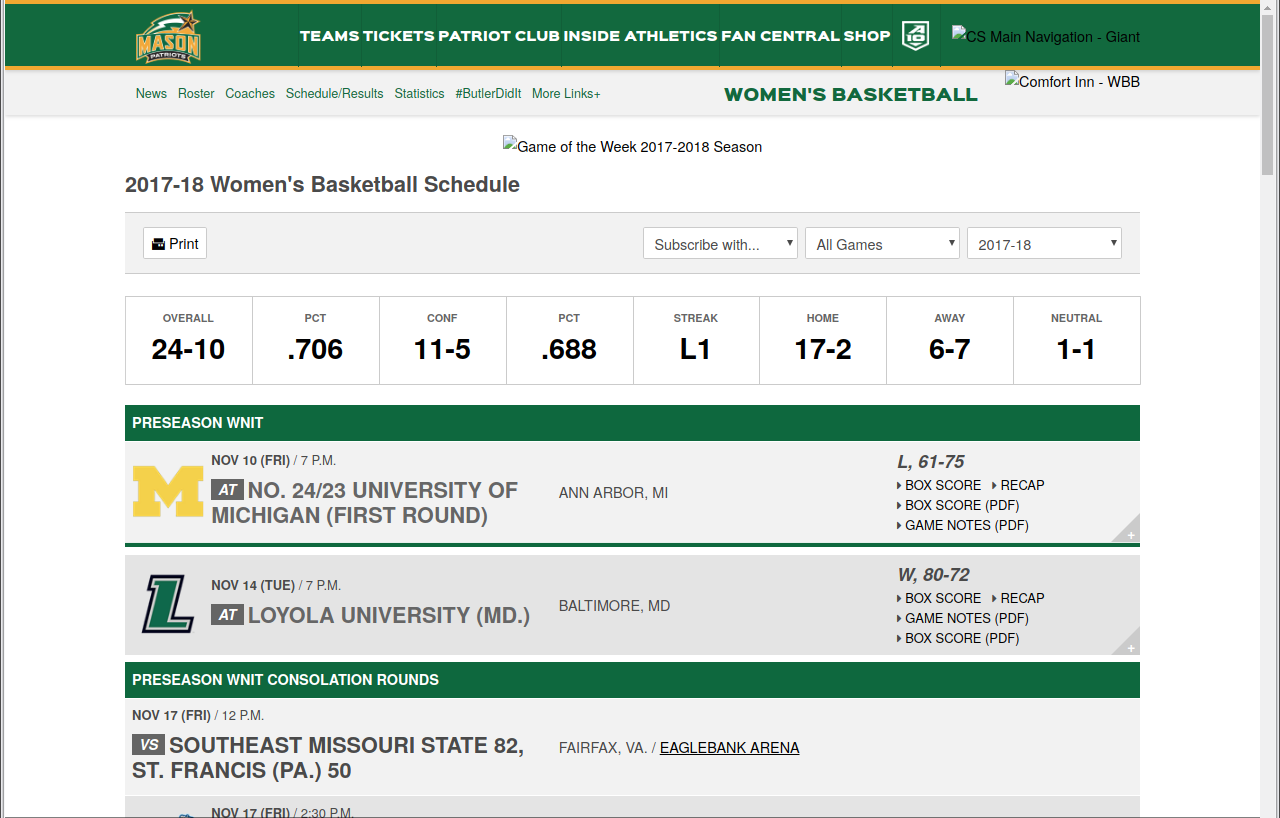

and the 2017-2018 schedule and scores page for the women’s team should look like this:

The following questions will guide you through the process of scraping this data. You are encouraged to review the examples provided in the Web scraping activity, the Class 16 slides, and the Class 19 slides while completing this part of the homework assignment.

Men’s basketball schedule and scores

- To start, you need to load the men’s basketball schedule and scores page into R. Do this using one line of code, and assign the accessed page data to a variable called `mens_bb`.

- Mason’s opponent for each game is listed on the left side of each row, just after a small box that says VS or AT. Use the SelectorGadget tool to determine the CSS selector needed to scrape this information, and then write the code that scrapes this information. Assign the scraped data to a variable called `mens_opponents`. If done right, `mens_opponents` should be a character vector containing 33 teams.

- The location for each game is listed to the right of the opponent’s name. For games played in the United States it lists the city and state; for example, “Fairfax, VA” is the location for home games. Use the SelectorGadget tool to determine the CSS selector needed to scrape this information, and then write the code that scrapes this information. Assign the scraped data to a variable called `mens_locations`.

- The date for each game is listed above the opponent’s name, and has the format Month Day (Day of the Week). For example, the first listed game has the date Nov 10 (FRI). Use the SelectorGadget tool to determine the CSS selector needed to scrape this information, and then write the code that scrapes this information. Assign the scraped data to a variable called `mens_dates`.

- The time for each game is listed to the right of the game date. For example, the first listed game has the time 7:00 P.M. Use the SelectorGadget tool to determine the CSS selector needed to scrape this information, and then write the code that scrapes this information. Assign the scraped data to a variable called `mens_times`.

- The score for each game is listed on the right side of each row in the format Mason’s score-Opponent’s score. For example, the first listed game has the score 67-65. Use the SelectorGadget tool to determine the CSS selector needed to scrape this information, and then write the code that scrapes this information. Assign the scraped data to a variable called `mens_scores`. Your `mens_scores` vector should have exactly 33 elements; if it has more or fewer, try another CSS selector.

- The W or L to the left of each game score indicates whether Mason won (W) or lost (L) the game. Use the SelectorGadget tool to determine the CSS selector needed to scrape this information, and then write the code that scrapes this information. You’ll notice that each scraped value comes back with a trailing comma, as “W,” or “L,”. Pipe (`%>%`) your scraped data into the `str_remove()` function and tell it to get rid of the comma. Assign the scraped data to a variable called `mens_win_loss`.

- Use the `data_frame()` function to create a tibble containing your scraped data. The columns should have the following names and be in this order:

  - date
  - time
  - opponent
  - location
  - score
  - win_loss

  Assign the tibble to a variable called `mens_df`. This table should have 33 rows.
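As a rough illustration of the last two steps, the sketch below cleans a comma out of some text and then combines vectors into a tibble. The values are made up for this example (they are not scraped results), the toy_ variable names are hypothetical, and it uses `tibble()`, which builds the same kind of object as `data_frame()` in more recent versions of the tibble package.

```r
library(magrittr)  # provides the %>% pipe
library(stringr)
library(tibble)

# Made-up values standing in for scraped text (assumption: not real results)
toy_win_loss_raw <- c("W,", "L,", "W,")
toy_scores <- c("67-65", "58-70", "81-77")

# str_remove() deletes the first match of the pattern in each string
toy_win_loss <- toy_win_loss_raw %>%
  str_remove(",")

# Equal-length vectors become the columns of the tibble, in the order given
toy_df <- tibble(
  score = toy_scores,
  win_loss = toy_win_loss
)

toy_df
```

Because every column must have the same length, a selector that returns too many or too few elements will cause an error at this step, which makes it a useful check.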

Women’s basketball schedule and scores

- The code you created for scraping the men’s basketball team schedule and scores should also work on the page for the women’s team with minimal changes. Copy the code you wrote in the blocks for the men’s page and paste it here. Change the prefix of each variable name from `mens_` to `womens_`, and update the code so that it loads the women’s schedule and scores page. The final result should be a tibble assigned to a variable called `womens_df`, which has the following columns in this order:

  - date
  - time
  - opponent
  - location
  - score
  - win_loss

  This table should have 34 rows.

Quick data exploration

Collecting data doesn’t serve much of a purpose if we don’t explore or analyze it. Create the summary reports and visualizations requested below to help you better understand the data you just collected.

- What was the average score for the men’s team (Mason only) when they won a game and when they lost a game? What was the average score for the women’s team (Mason only) when they won a game and when they lost a game?

  Hint: To answer this, you will need to use the `separate()` function.

- Plot the men’s histogram of scores and the women’s histogram of scores (just for the Mason teams, not the opponents), and then compare the two histograms. Which histogram is centered at a higher score? Which histogram has the larger spread? Are there any other notable differences?
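For the hint about `separate()`, here is a minimal sketch of how a score column in the Mason-Opponent format could be split into two numeric columns and then summarized by game outcome. The data is made up, and the column names mason_score and opponent_score are hypothetical choices for this example rather than required names.

```r
library(tidyr)
library(dplyr)

# Made-up scores in the "Mason-Opponent" format (assumption: not real results)
toy_df <- tibble(
  score = c("67-65", "58-70", "81-77", "60-62"),
  win_loss = c("W", "L", "W", "L")
)

# Split the score at the hyphen; convert = TRUE turns the pieces into integers
toy_split <- toy_df %>%
  separate(score, into = c("mason_score", "opponent_score"),
           sep = "-", convert = TRUE)

# Average Mason score within each outcome group
toy_summary <- toy_split %>%
  group_by(win_loss) %>%
  summarize(mean_mason_score = mean(mason_score))

toy_summary
```

Without `convert = TRUE` the new columns would stay as character strings, and `mean()` would not return a number.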

How to submit

When you are ready to submit, be sure to save, commit, and push your final result so that everything is synchronized to GitHub. Then, navigate to your copy of the GitHub repository you used for this assignment. You should see your repository, along with the updated files that you just synchronized to GitHub. Confirm that your files are up-to-date, and then complete the following steps:

- Click the Pull Requests tab near the top of the page.

- Click the green button that says “New pull request”.

- Click the dropdown menu button labeled “base:”, and select the option `starting`.

- Confirm that the dropdown menu button labeled “compare:” is set to `master`.

- Click the green button that says “Create pull request”.

- Give the pull request the following title: Submission: Homework 3, FirstName LastName, replacing FirstName and LastName with your actual first and last name.

- In the message box, write: My homework submission is ready for grading @shuaibm @jkglasbrenner.

- Click “Create pull request” to lock in your submission.

Cheatsheets

You are encouraged to review and keep the following cheatsheets handy while working on this assignment: